Luciano van Waas

Level Designer

For Starters...

Project Roles and Responsibilities

Game Description

General Information

“Investigate the strange oddities aboard Ornament Express, where you’re hired to recover your client’s belongings from an eccentric thief and his train of stolen artifacts. Explore a cabinet of curiosities spread throughout the train, visit carts holding odd puzzles and odder objects, and find hidden-away secrets.”

Hop aboard Ornament Express, a Virtual Reality puzzle game in which you take on the role of a Private Investigator in 1900s Switzerland.

You’ve been hired by a client whose valuable curio was stolen during a crime streak. The trail this eccentric thief left behind leads you to a train filled with stolen artefacts and mysterious items. Can you find the client’s stolen goods?

In this game, you’re tasked with sneaking through a moving cabinet of curiosities, the hideout of a fanatic thief obsessed with the strange and weird, looking for what he stole from your client. Locked in the train and stuck behind a series of puzzles, you’ll have to rummage through drawers and clutter and solve the puzzles and codes laid out in the carts to keep progressing through this strange hoard of bits and bobs.

Find all the ways to create chaos in the once-pristine train cart and mess up the thief’s hideout as much as you can! Pull open drawers, scatter papers around and play with the many souvenirs the thief has collected. The train becomes a captivating playground of disorder, showcasing the aftermath of your relentless pursuit of the client’s precious curio.

Ornament Express is a VR puzzle game inspired by entertainment media such as The Polar Express and Murder on the Orient Express. This project took 9 months to develop and was made by a team of 18.

18 Developers

9 months

Developed in Unreal Engine 5, Released on Steam

As for my personal roles and responsibilities within the team, I was the only level designer for most of the project, as well as QA lead and one of the team’s most active testers. For a while, I was also in charge of playtesting.

During the concept phase, I was in charge of sketching out gameplay moments, as well as designing the main puzzle of our first playable.

During the pre-production phase, I was in charge of creating the initial layout and overall player experience of the first level, also known as the Nature cart. Later in development, the idea of a second cart was pitched, and I ended up being in charge of its design as well. However, because I was the only level designer on the team at the time and couldn’t work on two carts at once, the second cart, also known as the Space cart, had to be scrapped. It was unfortunately never brought back. It did reach a playable state, but it never reached the same, or even a similar, level of quality as the Nature cart. Quality standards are there for a reason.

During the production phase, I was in charge of reworking the Nature cart. This is also where my duties as QA lead really took off. For example, I created a handful of templates for the team to fill out, one of which was a bug reporting template, and onboarded the team onto reporting bugs and issues as they came across them. I also created a large test plan for me and the other QA lead to go through on a weekly basis. This involved testing every single aspect of the game, including functionality, performance and visuals.

During the release phase, I essentially became a full-time QA tester. This meant no more level design and, thus, more time to test and make sure the game lived up to our quality standards. During this time, I ran full test plans three times a week, created automated tests, helped fix emergent bugs and helped create presentable builds for the public to enjoy. Achievements were also added during this time and had to be tested continuously.

The QA Process

Functionality Test Plan

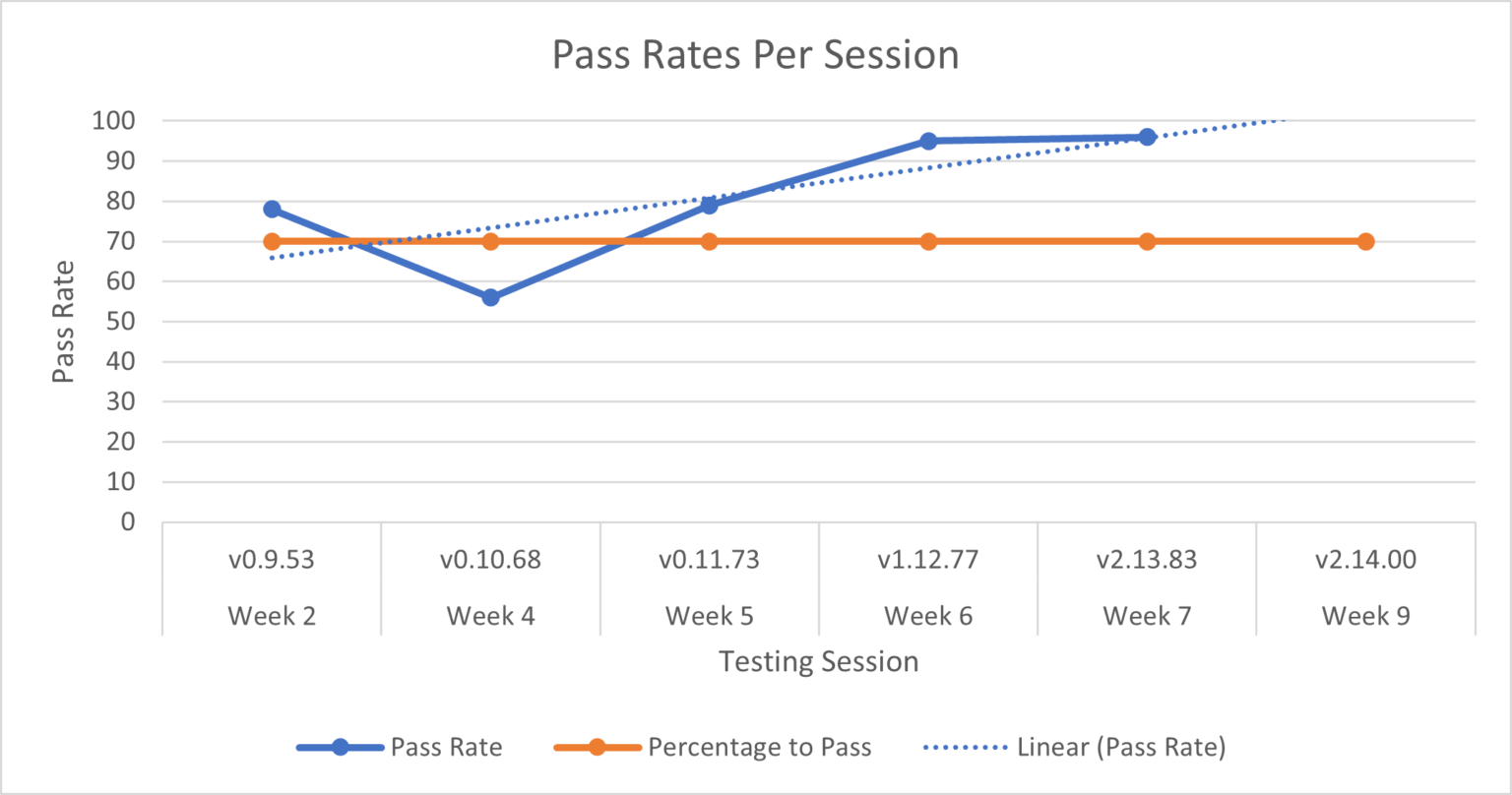

Pass Rates and Quality Standards

Even more Excel sheets

My responsibilities as QA lead

To get right into things, this is Ornament Express’ functionality test plan. It covers 99% of what a player is able to do, see and interact with in the game. This includes a wide range of collision, functionality, (audio)visual, lighting and spelling checks, among others. It was updated almost every day to cover both major and minor updates, and was used 2 to 3 times a week once production had started.

As time went on, I started onboarding the rest of the team onto its usage. This meant I could delegate certain sections of it to other people, taking some of the workload off my shoulders, and have a second pair of eyes ready for whenever I inevitably made a mistake or missed something.

During these testing sessions, I’d take notes and grade the build’s individual components based on their state at the time, on a scale ranging from “Missing” to “Presentable”. More importantly, this allowed me to create tasks for the rest of the team. For example: “As a result of yesterday’s functionality testing, I can conclude that the door at the end of the Nature cart does not open after solving the final puzzle.” This collaboration between me and the dev team allowed for quick and easy fixes, as well as a constant line of communication between QA and the rest of the team.

Throughout production, I also encouraged the dev team to report bugs as they came across them, using my template to avoid confusion and invalid reports. While it was certainly a struggle getting anyone to care about QA when all they really wanted to do was work on their own little features, it eventually worked out. Reports were being made, I corrected them where needed, and the workload was finally spread across the entire team rather than resting on just me. Bug reports are supposed to be simple, to the point and as objective and observational as possible. They should contain no assumptions, as an assumption may lead the developer tasked with the fix down the wrong path.
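The bug reporting template itself isn’t reproduced here, so the following Python sketch only illustrates the principle described above. It assumes a hypothetical set of template fields (`title`, `build`, `steps_to_reproduce`, `expected`, `actual`) and a hypothetical list of words that hint at a guessed cause; the `review` helper is likewise hypothetical. It flags missing fields and speculative wording, the kinds of problems a reviewer would correct before a report reaches a developer.

```python
from dataclasses import dataclass

# Hypothetical words that often signal a guess about the cause rather than an observation.
SPECULATIVE_WORDS = ("probably", "maybe", "i think", "because of", "caused by")


@dataclass
class BugReport:
    # Hypothetical template fields; the real template's fields are not listed in the text.
    title: str
    build: str
    steps_to_reproduce: list[str]
    expected: str
    actual: str


def review(report: BugReport) -> list[str]:
    """Return the problems found in a report; an empty list means it looks valid."""
    problems = []
    if not report.title.strip():
        problems.append("missing title")
    if not report.build.strip():
        problems.append("missing build number")
    if not report.steps_to_reproduce:
        problems.append("no reproduction steps")
    # Reports should describe observations only, so flag wording that guesses at a cause.
    text = " ".join([report.title, report.actual, *report.steps_to_reproduce]).lower()
    for word in SPECULATIVE_WORDS:
        if word in text:
            problems.append(f"speculative wording: '{word}'")
    return problems
```

A report whose actual result reads “Door probably not linked to the puzzle” would come back flagged for speculative wording. A keyword list is a blunt tool and cannot replace a human read-through; it only catches the most obvious guesses.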

Lastly, I used the results of these test plans to calculate what percentage of the game was presentable. I then set a quality standard for the team and future builds to prevent faulty or underdeveloped builds from going live. As demoralising as it may have been for the dev team to spend a whole sprint on a feature or a level only for it not to go live, it is also important for the project’s reputation not to push broken, unplayable or faulty builds. Being the bearer of bad news does not matter to me if it means the project benefits from it in the end.

Like I always say, we work for the project, the project doesn’t work for us.

As the weeks went on and release drew closer, the game became more and more polished. There are two reasons for this. First, I kept raising the percentage needed to pass as we approached release (although this is not reflected in the graph above). I did this because, with a fixed threshold, builds were passing every time by default: if a build was already 75% presentable the last time it was tested, it would pass again even without any further fixes. So, to motivate the team to keep polishing and to deliver a product we could truly be proud of, I raised the percentage needed to pass with almost every build, even if only by 5%.

Secondly, few new issues were found after the initial dip around week 4, or build 0.10. Between weeks 4 and 5, a large list of bugs and issues was reported to Jira to make sure they got fixed, and this list was worked on for the remainder of development. Issues that weren’t on this list, such as those found after week 5, were usually tackled immediately. This is because, after the dip in week 4, we became much stricter about what was and wasn’t allowed to be pushed to Perforce. Unsurprisingly, this solved most of our issues, leaving us with little more than the bug list mentioned above.

At our peak, we managed to reach a 96% pass rate, meaning only about 4% of the game was bugged or underdeveloped in the end.
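The pass-rate arithmetic and the rising threshold described above can be sketched in a few lines of Python. This is an illustration, not the team’s actual spreadsheet: it assumes each tested component gets exactly one grade, that only “Presentable” counts towards the pass rate, and that the threshold rises by a fixed 5% step. Only the “Missing” and “Presentable” grades come from the test plan; `BROKEN`, `FUNCTIONAL` and all function names are hypothetical.

```python
from enum import Enum


class Grade(Enum):
    # "Missing" and "Presentable" come from the test plan; the grades in between are hypothetical.
    MISSING = 0
    BROKEN = 1
    FUNCTIONAL = 2
    PRESENTABLE = 3


def pass_rate(grades: dict[str, Grade]) -> float:
    """Percentage of graded components that are presentable."""
    if not grades:
        return 0.0
    presentable = sum(1 for g in grades.values() if g is Grade.PRESENTABLE)
    return 100.0 * presentable / len(grades)


def build_passes(grades: dict[str, Grade], threshold: float) -> bool:
    """A build may go live only if its pass rate meets the current threshold."""
    return pass_rate(grades) >= threshold


def next_threshold(current: float, step: float = 5.0, cap: float = 100.0) -> float:
    """Raise the bar for the next build so an unchanged build no longer passes automatically."""
    return min(current + step, cap)
```

With three of four components presentable, a build scores 75% and passes a 75% threshold; once the threshold is raised to 80%, the same unchanged build fails, which is the effect the rising threshold was meant to have.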

The Excel sheet pictured above gives an overview of all actors placed within the build and was designed with ease of use and clarity in mind. Namely, it states whether any given actor has a hand pose (for when the player grabs it), a weight value (for when the player drops it, as well as for one of the puzzles in the Nature cart) and a fade-out (for when the player sticks their head inside it). Some actors need a fade-out whereas others don’t, some actors don’t need a weight value because they cannot be moved or grabbed, and so on.

As more and more actors were added to the game, this became confusing and hard to keep track of. This is where my Excel sheet came into play: an overview of every last fade-out, hand pose and weight value in the game. It allowed us to keep track of what still needed work, and the dev team could update and use it as they saw fit.
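The checks the actor overview made possible can be sketched in Python as well. This assumes the sheet is exported to CSV with one row per actor and hypothetical column names (`actor`, `grabbable`, `needs_fade_out`, `hand_pose`, `weight`, `fade_out`) holding yes/no values; the real sheet’s layout isn’t shown. The rules follow the paragraph above: grabbable actors need a hand pose and a weight value, and actors the player can put their head inside need a fade-out.

```python
import csv
import io
from dataclasses import dataclass


@dataclass
class ActorRow:
    name: str
    grabbable: bool        # can the player pick it up?
    needs_fade_out: bool   # can the player put their head inside it?
    has_hand_pose: bool
    has_weight: bool
    has_fade_out: bool


def parse_sheet(csv_text: str) -> list[ActorRow]:
    """Parse a CSV export of the overview; the column names are hypothetical."""
    def flag(value: str) -> bool:
        return value.strip().lower() in ("yes", "y", "true", "1")

    rows = []
    for r in csv.DictReader(io.StringIO(csv_text)):
        rows.append(ActorRow(
            name=r["actor"],
            grabbable=flag(r["grabbable"]),
            needs_fade_out=flag(r["needs_fade_out"]),
            has_hand_pose=flag(r["hand_pose"]),
            has_weight=flag(r["weight"]),
            has_fade_out=flag(r["fade_out"]),
        ))
    return rows


def missing_work(rows: list[ActorRow]) -> dict[str, list[str]]:
    """Map each actor to the setup it still lacks; complete actors are omitted."""
    todo = {}
    for row in rows:
        missing = []
        if row.grabbable and not row.has_hand_pose:
            missing.append("hand pose")
        if row.grabbable and not row.has_weight:
            missing.append("weight")
        if row.needs_fade_out and not row.has_fade_out:
            missing.append("fade-out")
        if missing:
            todo[row.name] = missing
    return todo
```

Actors with nothing missing are left out of the result, so it doubles as a to-do list for the dev team.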

This, alongside the previously mentioned test plan, automated test cases, Perforce pipeline, our Jira-based bug list and so much more made for a really nice, well-monitored QA process.

● Help maintain a testing pipeline. (Taking care of, troubleshooting and maintaining the Jenkins script made by Melvin Rother, the other QA lead.)

● Set up and maintain test plans. (Setting up (smoke) test plans, onboarding others onto the process and continuously finding ways to innovate and iterate on said test plans in order to increase productivity and save time.)

● Set up and maintain a bug list to keep an overview of open issues. (Setting up a bug list in Jira; creating templates for the rest of the team to use, such as a bug reporting template; onboarding the rest of the team onto the bug reporting process by creating tutorials, writing example reports and hosting relevant meetings; reporting bugs; ensuring bugs also get reported by the rest of the team; and correcting faulty bug reports.)

● Make QA reports and actively share them. (Doing weekly QA sessions and writing up a summary of what I found, as well as creating tasks for the rest of the team following those summaries and reports)

● Host bug fixing and QA sessions. (Hosting a meeting at the end of every sprint, or more often if necessary, where we sit down as a team and watch someone play the game while we note and report every bug they come across. The goal is, firstly, to collectively go over the current state of the game and, secondly, to get the team more involved with the QA process as a whole, as some found it difficult to get into. These meetings also helped take some of the workload off my shoulders.)

● Be able to ensure quality by the time the product is released. (Setting quality standards for the team to adhere to, helping set up Perforce pipelines to make sure faulty content does not get pushed, testing multiple times a week to monitor the state of the game and forward that information to the devs, and creating pipelines, templates and processes to be used by both myself and the dev team, for example the functionality test plan, the previously mentioned actor overview, and the bug reporting template and onboarding process.)

● Help ensure Perforce is used as agreed upon. (This one speaks for itself).

A little bit of level design

Puzzle Design: The Concepting Phase

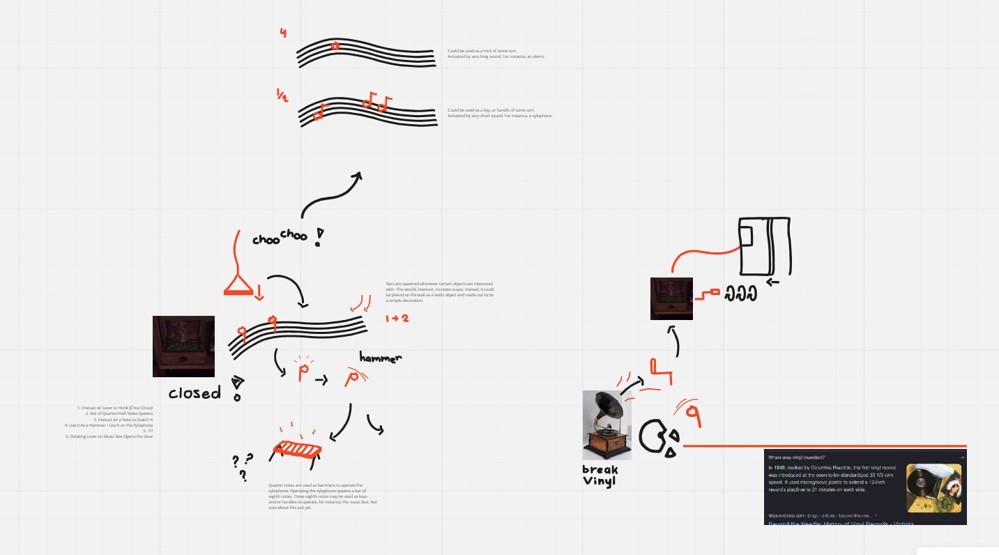

I proposed the above puzzle design to the team during the concepting phase. It was chosen as the main puzzle of our first playable, and sprint planning revolved around implementing it in-engine.

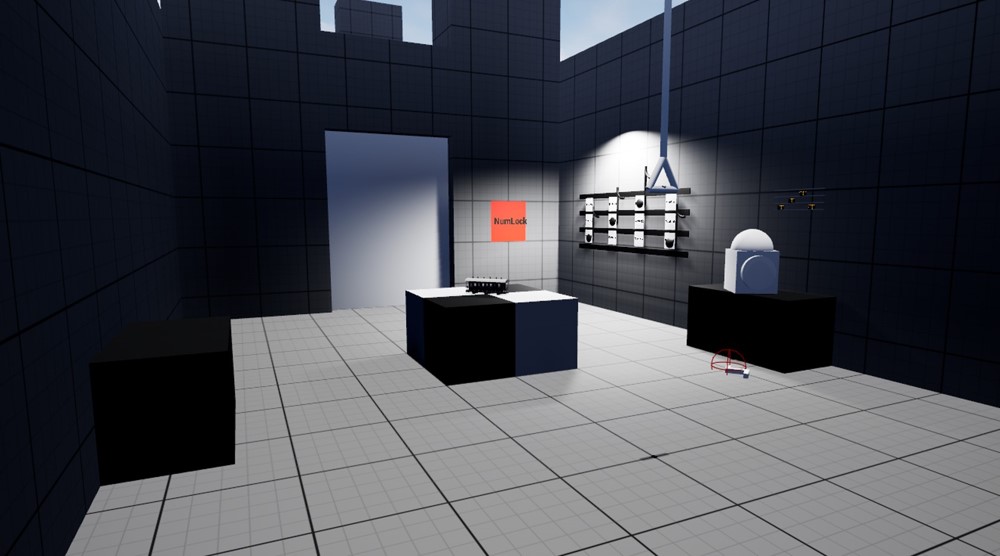

This screenshot shows the initial implementation of the puzzle, which was more of a proof of concept than a properly laid-out puzzle.

This screenshot shows the iterated-upon implementation found in the first playable. However, this puzzle was ultimately removed during pre-production.

Puzzle Design: (Pre-)Production

1st Iteration:

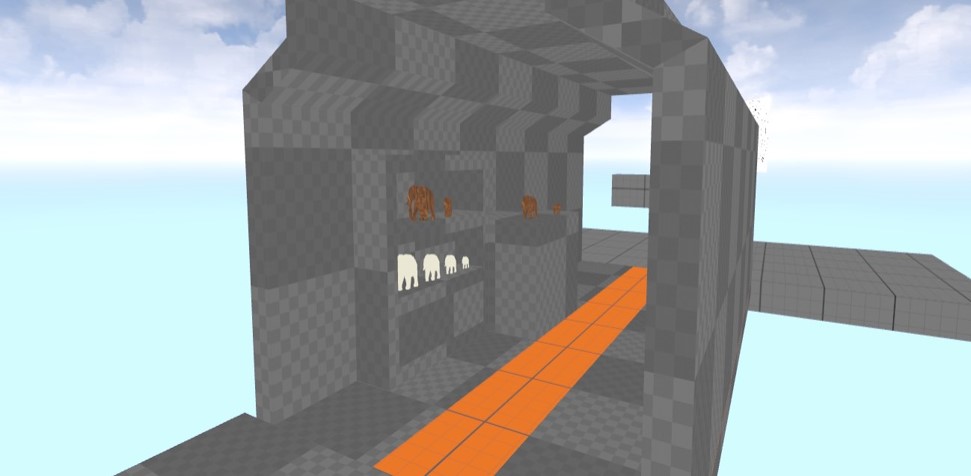

I was asked to create the underlying system behind what we called the ‘Elephant puzzle’. Nicolo (Programmer) and I worked on it together: he helped me program the NumLock and InputFields, as the puzzle made use of some of his previously established systems.

To quickly explain the puzzle, the player is asked to sort 4 elephant props by size. Once the elephants are in the right order, the player receives the puzzle’s reward.
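The game’s version of this check lives in Unreal Engine 5 and builds on Nicolo’s NumLock and InputFields systems, none of which are shown here. As an engine-neutral illustration, the Python sketch below captures only the core rule: the reward is given once every slot is filled and the elephants are in order of size. It assumes four slots, a numeric `size` per prop and smallest-to-largest ordering; the class and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ElephantProp:
    name: str
    size: float  # hypothetical size value, used only for ordering


def is_solved(slots: list[Optional[ElephantProp]], expected_count: int = 4) -> bool:
    """True when every slot is filled and sizes increase from the first slot to the last."""
    if len(slots) != expected_count or any(p is None for p in slots):
        return False
    sizes = [p.size for p in slots]
    return all(a < b for a, b in zip(sizes, sizes[1:]))


class ElephantPuzzle:
    def __init__(self, slot_count: int = 4):
        self.slots: list[Optional[ElephantProp]] = [None] * slot_count
        self.reward_given = False

    def place(self, index: int, prop: Optional[ElephantProp]) -> None:
        """Put a prop in a slot (or clear it with None) and re-check the solution."""
        self.slots[index] = prop
        if not self.reward_given and is_solved(self.slots, len(self.slots)):
            self.reward_given = True  # in-game, this is where the reward drawer would open
```

Checking on every placement, and guarding with `reward_given`, means the reward is given exactly once, the moment the last elephant lands in the right spot.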

2nd Iteration:

After experimenting with the underlying puzzle for some time, it was time to create the environment the puzzle would eventually take place in. This meant creating an accurately sized and spaced greybox for the train cart.

This train cart allowed me to plan out the general placement of both the puzzle pieces and the reward. It also gave me the idea to scatter the elephants throughout the train cart, so that finding them becomes a reward for solving other puzzles as well as for exploring.

3rd Iteration:

The first playable. I was asked to create the pictured level from scratch, from start to finish, using, among other things, the rest of the team’s contributions, such as newly added 3D models, textures, playthings and functionality.

As for the puzzle itself, it barely changed. However, the one change that was made was major. Rather than placing the puzzle pieces in order from the start, the pieces are now scrambled and scattered across the train cart (as mentioned previously).

4th Iteration:

The final product. The elephant puzzle received one last iteration, and an important one at that. Notice how, in the previous iteration, the reward box/drawer was essentially placed on the ground. Through research, playtesting and common sense, I came to the conclusion that this can be straining and/or frustrating for some players. This, and the fact that the reward box used to be off-screen when looking at the puzzle, made me rethink its location. It is now placed in the middle of the cabinet rather than at the bottom.

Takeaways


Proper pipelines and processes are important to the overall success of a team. It might feel a little tedious or like it’s ‘too much’ in the moment, but having these processes in place (and adhered to) can make all the difference. Outliers happen, but shouldn’t become the norm.

Pipelines and processes put in place must make sense in the context of the team. Sure, I could curate a test plan according to IEEE 829 standards, but no one would understand it and it would take ages to fill in. There’s a time and place for everything.

It might sound obvious, but it’s important to listen to the people who use your pipelines. In the end, it’s not about how great you think your pipelines are, but about how usable, flexible and sustainable they are for the people who use them. For instance, if people aren’t reporting bugs, outliers aside, there’s a chance the bug reporting template is simply too tedious to fill in.

It doesn’t hurt to ask for help outside the team.

Define roles, responsibilities and rights at the start of the project so that they are clearly outlined and understood by everyone.